AI Videos / Experimentations

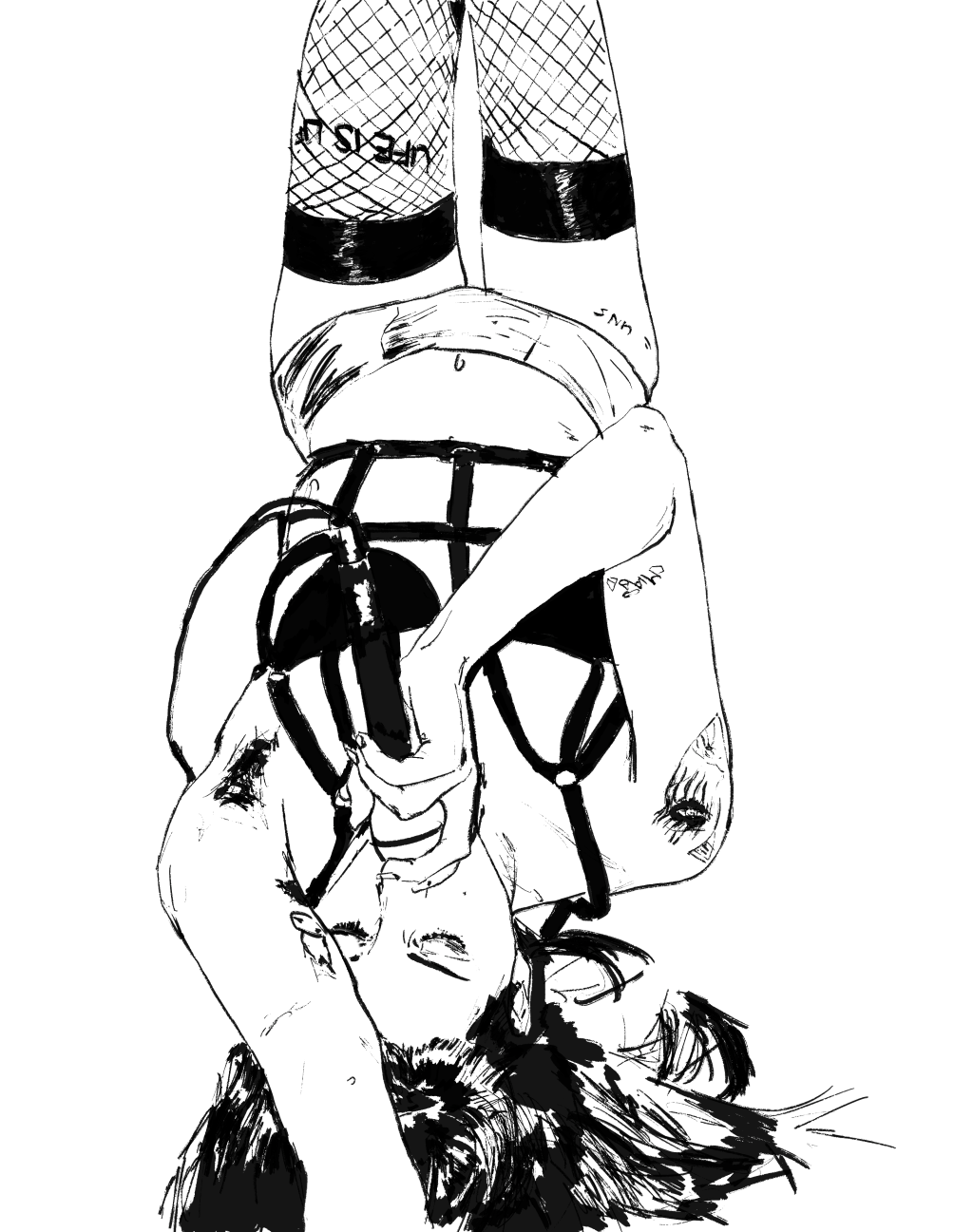

Qwen-Image-Edit-2511 LoRA training based on my digital sketches:

Since I began tracing images on my iPad in 2020, I figured I could put together a decent dataset of image pairs, with the actual photos as input and my traces as output. In preparation for training, I made sure that the input and output images have identical resolutions and that there is no shift between them on either axis. The trained model should then be able to convert new input photos into my own style of tracing, capturing the world from digital images with my hands.
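The resolution check can be scripted rather than done by eye. Below is a minimal sketch, not the exact script I used. It assumes every pair is saved as PNG under the same file name in two folders; the folder layout and the helper names `png_size` and `find_mismatched_pairs` are made up for illustration. It only compares dimensions and does not detect a shift between photo and trace.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path):
    """Return (width, height) read from the IHDR chunk of a PNG file."""
    with open(path, "rb") as f:
        header = f.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR", width, height.
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def find_mismatched_pairs(photo_dir, trace_dir):
    """List (file name, reason) for pairs that are incomplete or differ in size."""
    problems = []
    for photo in sorted(Path(photo_dir).glob("*.png")):
        trace = Path(trace_dir) / photo.name  # assumed: same name in both folders
        if not trace.exists():
            problems.append((photo.name, "missing trace"))
            continue
        photo_size, trace_size = png_size(photo), png_size(trace)
        if photo_size != trace_size:
            problems.append((photo.name, f"{photo_size} vs {trace_size}"))
    return problems
```

Calling `find_mismatched_pairs("photos", "traces")` (hypothetical folder names) returns an empty list when every photo has a trace of the same resolution.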

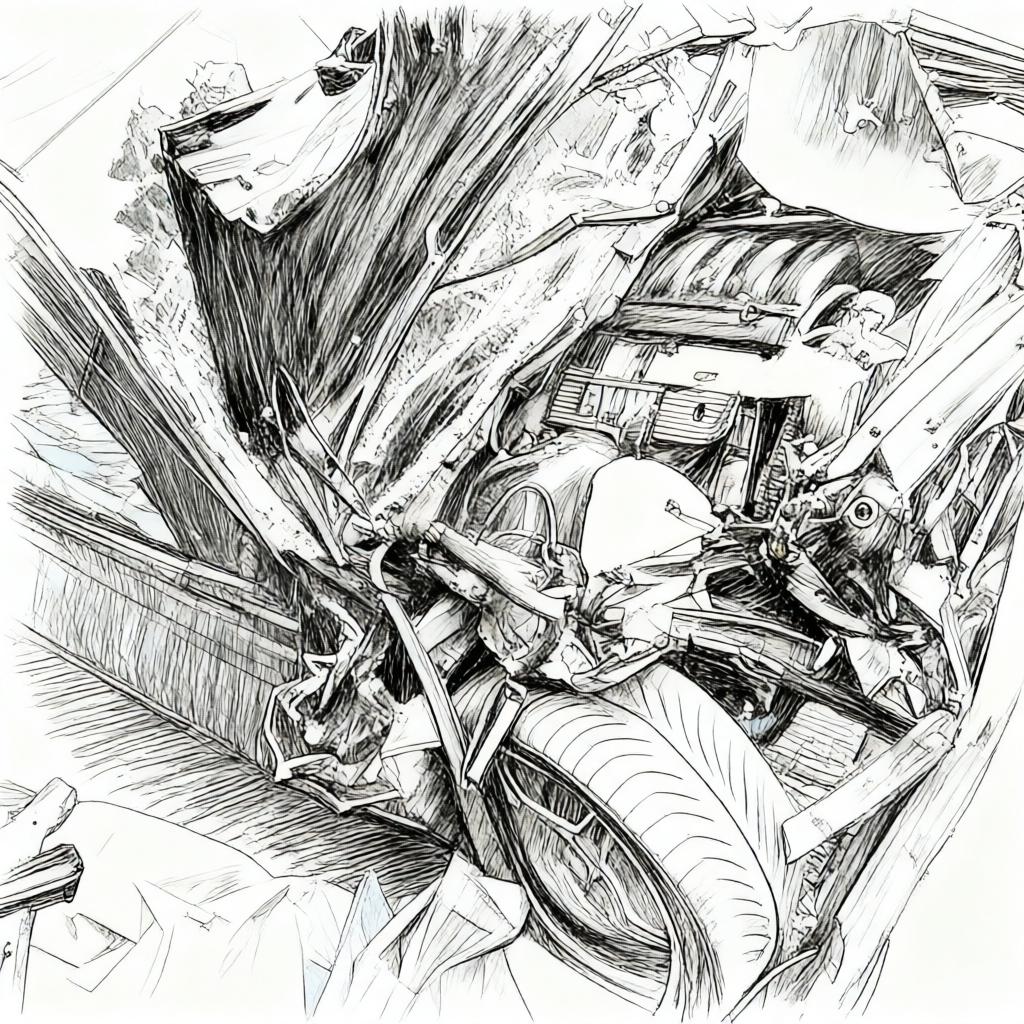

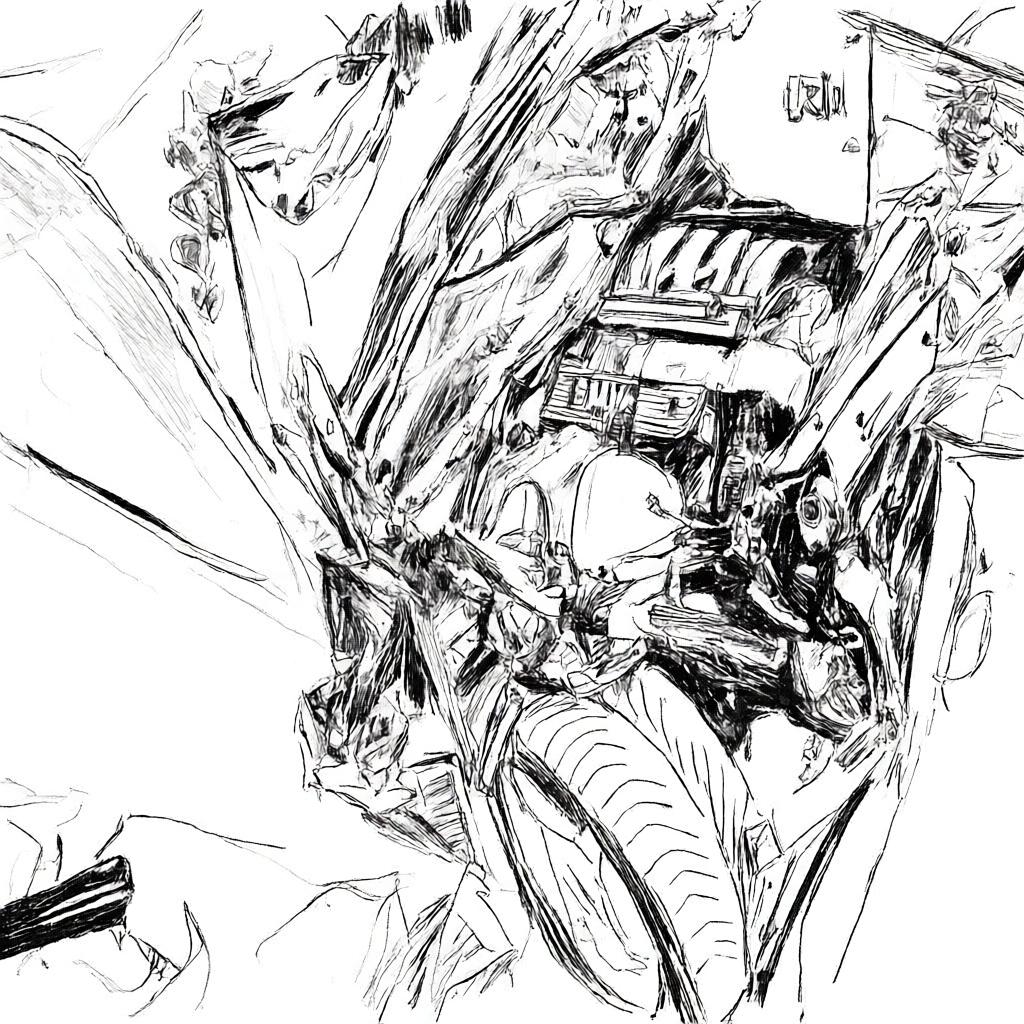

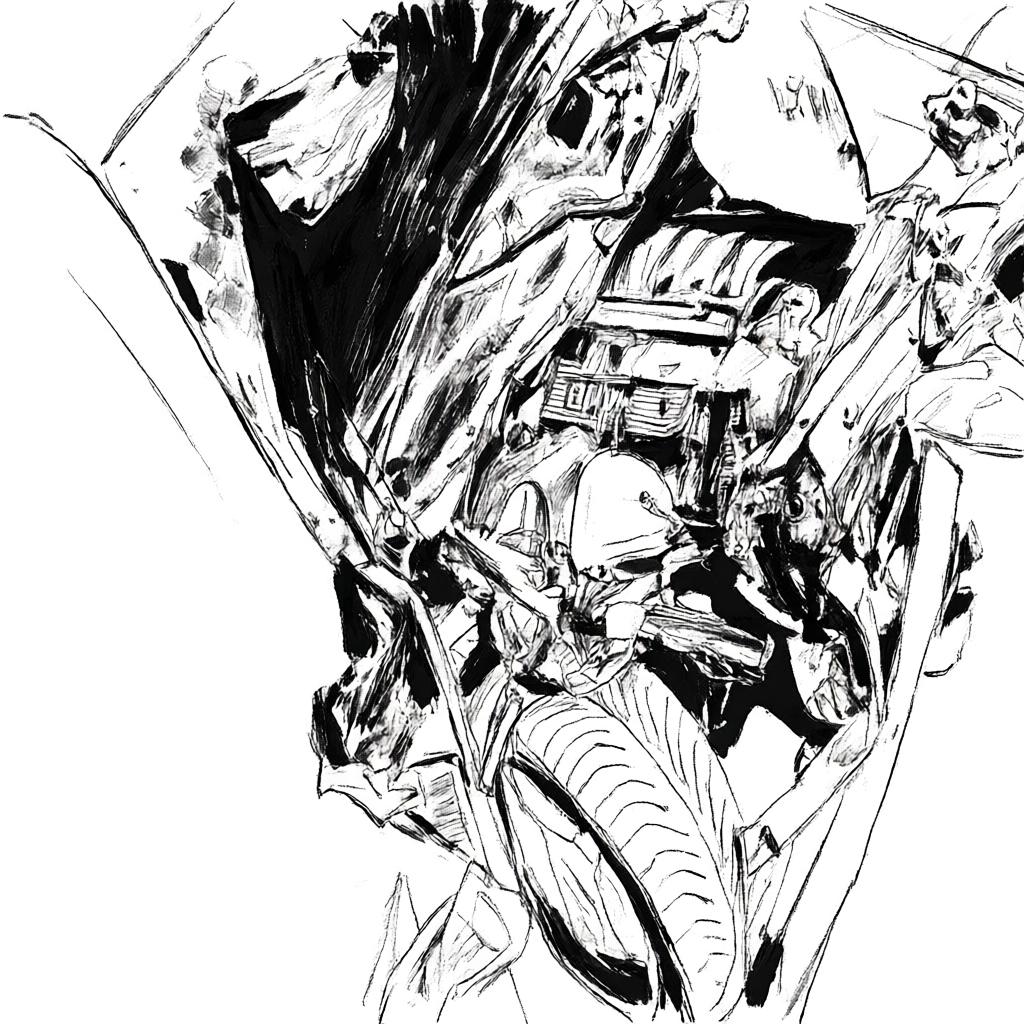

This is how the training went, from a generic pencil sketch to my way of sketching digitally:

As a control test, the image on the left is AI-generated by my LoRA, which was trained on 63 sketches. On the right is my hand-drawn sketch of the same image, which was not used in the training data:

Wan 2.2 Videos:

The videos take a minute to load.

Stable Diffusion - Deforum

Cadence : 3 , Anti-blur : 1

Using the model "Lyriel_v15"

Testing new motion settings, syncing with the scenes of the prompt, and 'anti-blur'.

"15": "The single cell evolves into a jellyfish floating gracefully. It then transforms into a fish, followed by a taphole, a frog, a turtle, and a hummingbird made from holographic light collapses into a diamond cocoon the evolution of life culminates with the hatching of the Geode egg. Full HD resolution, photorealistic visuals, ultra-detailed rendering. Art by Ernst Haeckel and Charles R. Knigh --neg"

Putting different videos in your CNs (ControlNets) _and_ HybridVideo makes for some cool effects. No translation_z here: all negative flow in Hybrid. I used 80 steps and CFG 12, switched the sampler to DPM++ 2S a, changed the run type to 3D, turned off depth warping, and used a Cadence setting of 9 with strength up to 0.7.